I just noticed this yesterday when I was using Stellarium Web Planetarium to see where the Moon and planets were in the sky in relation to the constellations at the time I was born. Here are the screenshots of the sky on December 28, 1987 at 17:15 (5:15pm):

Strange. I always thought my Moon was in Aries. I had to double check.

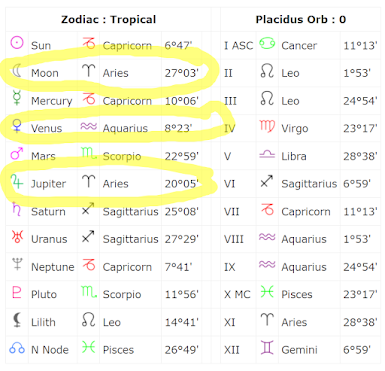

I went to cafeastrology.com to get my free astrology birth chart report, which covers what the entire sky was up to when I was born. Here's the report:

Right away, the report does not match Stellarium. It shows both the Moon and Jupiter in ARIES, not in Pisces. Venus is different too: it shows in Aquarius, not Capricorn. WTF

What is going on here? One of them must be wrong. Or am I missing something? Am I not supposed to compare astrology charts to the actual sky? Are astrology positions different from the observable sky that Stellarium shows? Or is Stellarium wrong in how it reconstructs past skies? Which representation is the actual sky when I was born? If these two are the only ways to know, how do I know which one is correct?? How would we EVER know?? I'm so confused.